According to a new report published by a group of Google AI researchers in Scientific Reports, a peer-reviewed journal in the Nature portfolio, deep learning can detect abnormal chest x-rays with the same accuracy as professional radiologists.

The deep learning system can help radiologists prioritize chest x-rays and can also serve as a first-response tool in emergency situations where expert radiologists are unavailable. While deep learning is nowhere close to replacing radiologists, the findings suggest that the technology can help boost their productivity at a time when the world is in desperate need of medical specialists. The report also demonstrates how far the AI research community has progressed in developing techniques that reduce the risks associated with deep learning models while producing work that can be built upon in the future.

The advancements in medical imaging analysis powered by AI are undeniable. Hundreds of deep learning systems for medical imaging have now been approved by the FDA and other regulatory agencies across the world.

However, the majority of these models have been trained for a single, narrow task, such as detecting evidence of a specific disease or condition in x-ray images. As a result, they are only useful if the radiologist already knows what to look for. But radiologists do not always begin by looking for a specific condition, and it is incredibly difficult, if not impossible, to create a system that can detect every possible ailment.

According to Google’s AI researchers, the large range of possible CXR [chest x-ray] abnormalities makes it impractical to detect every possible condition by developing numerous distinct systems, each of which identifies one or more pre-specified disorders.

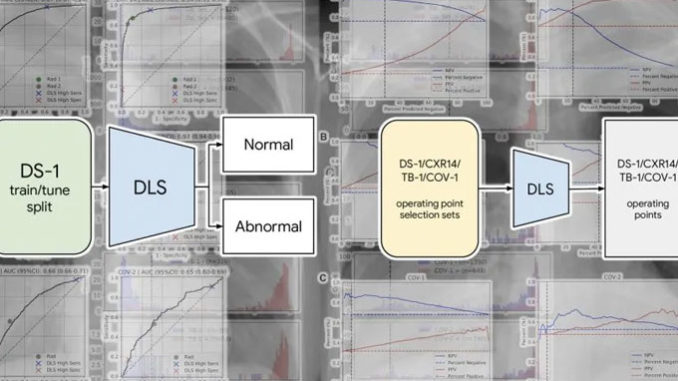

Their answer was to develop a deep learning system that can tell whether a chest scan is normal or contains clinically relevant findings. Finding a balance between specificity and generalizability when defining the problem domain for deep learning systems is a difficult task. On one end of the spectrum are deep learning models that can perform very narrow tasks (e.g., recognizing pneumonia or fractures) at the risk of not generalizing to other tasks (e.g., detecting tuberculosis). On the other end are systems that can answer a broad question but can’t handle more specific problems.

The Google researchers reasoned that abnormality detection could have a significant impact on radiologists’ work, even if the trained model didn’t identify specific diseases. According to the researchers, a trustworthy AI system for differentiating normal CXRs from abnormal ones can help with patient workup and management. For example, such technology could assist in deprioritizing or excluding normal cases, potentially speeding up the clinical workflow.

Although the Google researchers did not describe their model in detail, the publication references EfficientNet, a family of convolutional neural networks (CNNs) known for attaining state-of-the-art accuracy on computer vision tasks at a fraction of the computational cost of other models.

B7, the model used to detect x-ray abnormalities, is the largest of the EfficientNet family, with 813 layers and 66 million parameters (though the researchers probably adjusted the architecture for their application). The researchers did not use Google’s TPU processors to train the model, opting instead for 10 Tesla V100 GPUs.

The intensive work done to build the training and test datasets is perhaps the most noteworthy aspect of Google’s project. Deep learning engineers are frequently confronted with the problem of their models picking up the wrong biases from their training data. In one case, a deep learning system for skin cancer diagnosis had learned to detect the presence of ruler markings on skin instead of the cancer itself. In other cases, models can become sensitive to irrelevant factors such as the brand of camera used to capture the images. More crucially, a trained model must be able to retain its accuracy across varied populations. The researchers used six different datasets for training and testing to ensure that no harmful biases crept into the model.

More than 250,000 x-ray scans from five Indian hospitals were used to train the deep learning model. Based on information taken from the outcome report, the examples were categorized as “normal” or “abnormal.”

To ensure that the model generalized to different regions, it was tested on new chest x-rays obtained from hospitals in India, China, and the United States. To assess how the model would perform on diseases that weren’t in the training dataset, the test data included x-ray scans for TB and Covid-19. Three radiologists independently reviewed and validated the accuracy of the labels in the dataset.
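One way to picture this kind of generalization check is to compute sensitivity and specificity separately for each test site. The sketch below is only an illustration, not the researchers' evaluation code: the function name `per_site_metrics`, the 0.5 threshold, the site names, and the toy records are all assumptions made up for this example.

```python
from collections import defaultdict

def per_site_metrics(records, threshold=0.5):
    """Compute sensitivity and specificity per site.

    records: iterable of (site, true_label, score), where true_label is
    1 for abnormal and 0 for normal, and score is the model's
    abnormality score. The threshold is an assumed operating point.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for site, label, score in records:
        predicted = 1 if score >= threshold else 0
        if label == 1:
            key = "tp" if predicted == 1 else "fn"
        else:
            key = "tn" if predicted == 0 else "fp"
        counts[site][key] += 1

    metrics = {}
    for site, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        metrics[site] = {
            # None marks a site with no cases of that class.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return metrics

# Hypothetical toy data: (site, true_label, abnormality_score).
records = [
    ("site_a", 1, 0.9), ("site_a", 1, 0.4),
    ("site_a", 0, 0.2), ("site_a", 0, 0.1),
    ("site_b", 1, 0.8), ("site_b", 0, 0.7), ("site_b", 0, 0.3),
]
site_metrics = per_site_metrics(records)
for site, values in sorted(site_metrics.items()):
    print(site, values)
```

A large gap between sites in either metric would suggest the model has latched onto something specific to its training hospitals rather than to the x-rays themselves.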

The labels have been made public to aid future research on deep learning models for radiology. “We are publishing our abnormal versus normal classifications from three radiologists (2430 labels on 810 images) for the publicly accessible CXR-14 test set to support the further development of AI models for chest radiography.” The researchers add, “We feel this will be valuable for future work because label quality is critical for any AI study in healthcare.”
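Three readers on 810 images gives the 2430 labels mentioned in the quote (3 × 810). A common way to turn several readings into a single reference label is a majority vote. The article does not say whether the researchers combined their labels this way, so treat the sketch below as an assumption. The image IDs and readings are invented for illustration.

```python
from collections import Counter

def majority_label(votes):
    """Return the label chosen by most readers.

    With two possible labels and an odd number of readers,
    a tie cannot occur.
    """
    if len(votes) % 2 == 0:
        raise ValueError("need an odd number of votes to avoid ties")
    return Counter(votes).most_common(1)[0][0]

# Hypothetical readings: three radiologists per image.
readings = {
    "image_001": ["abnormal", "abnormal", "normal"],
    "image_002": ["normal", "normal", "normal"],
    "image_003": ["normal", "abnormal", "abnormal"],
}

consensus = {image_id: majority_label(v) for image_id, v in readings.items()}
# Images where all three readers agreed.
unanimous = [image_id for image_id, v in readings.items() if len(set(v)) == 1]

print(consensus)
print(unanimous)
```

Tracking which images were unanimous is useful in its own right, because cases where expert readers disagree are often the ones where a model's errors are hardest to interpret.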

For all this progress, AI is still not close to displacing radiologists. Although the number of radiologists has increased, there is still a critical shortage around the world, and a radiologist’s job entails much more than simply examining x-ray scans.

The Google researchers write in their study that their deep learning model was successful in detecting abnormal x-rays with accuracy comparable to, and in some cases better than, that of human radiologists. They do, however, point out that the real value of this technology comes when it is used to boost radiologists’ productivity.

The researchers put the deep learning system to the test in two simulated scenarios, in which it aided a radiologist either by helping prioritize scans that were flagged as abnormal or by excluding scans that were found to be normal. In both scenarios, pairing deep learning with a radiologist resulted in a considerable reduction in turnaround time.
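The prioritization scenario can be illustrated with a toy worklist simulation. This is a minimal sketch under simplifying assumptions that are not taken from the study: all scans are waiting at time zero, a single radiologist reads them one after another, and every read takes the same fixed time. The scan IDs, scores, and the `minutes_per_read` value are hypothetical.

```python
def mean_abnormal_turnaround(worklist, minutes_per_read=2.0):
    """Average time until abnormal scans have been read.

    worklist: list of (scan_id, is_abnormal, score) in reading order.
    Assumes one reader and a fixed read time per scan.
    """
    finish_times = [
        (position + 1) * minutes_per_read
        for position, (_, is_abnormal, _) in enumerate(worklist)
        if is_abnormal
    ]
    if not finish_times:
        raise ValueError("worklist contains no abnormal scans")
    return sum(finish_times) / len(finish_times)

# Hypothetical worklist in arrival order: (scan_id, is_abnormal, model_score).
arrival_order = [
    ("s1", False, 0.10),
    ("s2", False, 0.20),
    ("s3", True, 0.95),
    ("s4", False, 0.05),
    ("s5", True, 0.80),
    ("s6", False, 0.30),
]

# Read the scans the model considers most likely abnormal first.
prioritized = sorted(arrival_order, key=lambda scan: scan[2], reverse=True)

fifo_minutes = mean_abnormal_turnaround(arrival_order)
triaged_minutes = mean_abnormal_turnaround(prioritized)
print(fifo_minutes, triaged_minutes)
```

In this toy example the abnormal scans move from the third and fifth positions to the first two, so their average wait drops. The size of the gain in practice depends on how well the model's scores separate abnormal from normal scans.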